The Ethical Dilemma of AI Agents: A Looming Challenge

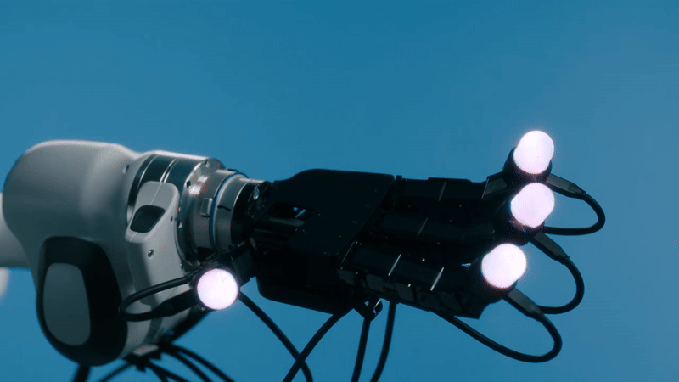

Imagine this: AI that not only mimics our personalities but also acts on our behalf. It’s a future that’s closer than we think, and it brings a host of ethical questions we need to address now. Generative AI models have made significant strides in conversing with us and creating content, but they’re still limited in their ability to carry out tasks for us. Enter AI agents: AI models with a mission.

These agents come in two types: tool-based agents and simulation agents. Tool-based agents can be instructed in natural language to complete digital tasks, such as filling out forms or navigating web pages. Anthropic and Salesforce have already released such agents, and OpenAI plans to follow suit. Simulation agents, on the other hand, are designed to behave like humans. Social scientists originally developed them to run studies that would be impractical or unethical to conduct with real human subjects, and they have evolved rapidly since.

A recent paper by Joon Sung Park and colleagues showcased AI agents that could replicate the values and preferences of 1,000 interview participants with remarkable accuracy. Taken together, advances in tool-based and simulation agents mean we’re heading toward AI that can both mimic our personalities and act on our behalf. Companies like Tavus are already building ‘digital twins’ and envision AI agents serving as therapists, doctors, and teachers.

While this technology promises convenience, it also raises serious ethical concerns. Deepfakes could become even more personal and harmful if AI can replicate someone’s voice, preferences, and personality. And should people be told whether they’re interacting with an AI agent or a human? These questions might seem distant, but they’re not. As AI agents become more integrated into our lives, these dilemmas will only grow more pressing. It’s time to start grappling with them before they become unmanageable.